Friday, 17 December 2010

Another X'mas present from Infinispan: 4.2.0.FINAL

So Christmas is meant to be full of presents, right? Yep you heard that right - two releases in one day :-) Hot on the heels of Galder's 5.0.0.ALPHA1 release, here's the much-awaited 4.2.0.FINAL. This is a big release. Although it only contains a handful of new features - including ISPN-180 and ISPN-609 - it contains a good number of stability and performance improvements over 4.1.0, a complete list of which is available here. Yes, that is over 75 bugs fixed since 4.1.0!!

This really is thanks to the community, who have worked extremely hard on testing, benchmarking and pushing 4.1.0 - and subsequent betas and release candidates of 4.2 - which has got us here. This really is helping the project to mature very, very fast. And of course the core Infinispan dev team who've pulled some incredible feats to get this release to completion. You know who you are. :-)

I'd also like to reiterate the availability of Maven Archetypes to jump-start your Infinispan project - read all about that here.

So with that, I'd like to leave you with 4.2.0, Ursus, and say that we are full steam ahead with 5.0 Pagoa now. :-) As usual, download 4.2.0 here, read about it here, provide feedback here.

Enjoy, and Happy Holidays!

Manik

Xmas arrives early for Infinispan users! 5.0.0.ALPHA1 is out!

Announcing project Radargun

Radargun is a tool we've developed and used for benchmarking Infinispan's performance, both between releases and against other similar products. Initially we shipped it as the (poorly named) Cache Benchmark Framework.

Due to increased community interest and the fact that it has reached a certain maturity (we have used it to benchmark clusters of 100+ nodes), we decided to revamp it a little and give it a new name: Radargun.

You can read more about it here. A good start is the 5MinutesTutorial.

Cheers,

Mircea

Tuesday, 14 December 2010

4.2.0.CR4 is out

Details of issues fixed are here, the download is here, and please report issues here. :-)

Enjoy,

Manik

Maven Archetypes

Infinispan gains another team member

Welcome aboard, Pete - we're all very excited to have you on board! :-)

Cheers

Manik

Friday, 3 December 2010

4.2.0.CR3 released

For a full list of changes, see the release notes. As always, download, try out and provide feedback!

Onwards to a final release...

Enjoy

Manik

Wednesday, 1 December 2010

Infinispan and JBoss AS 5.x

While Infinispan can be used as a Hibernate second level cache with Hibernate 3.5 onwards, Bill deCoste has written a guide to getting Infinispan to work in older versions of Hibernate, specifically with JBoss AS 5.x. Hope you find this useful!

Cheers

Manik

Thursday, 25 November 2010

Infinispan 4.2.0.CR2 "Ursus" is out!

The CR2 release fixes a critical issue from CR1: a NumberFormatException when creating a new GlobalConfiguration object (ISPN-797). Besides this, the following two issues were fixed in the client/server modules:

Wednesday, 24 November 2010

Infinispan 4.2.0.CR1 "Ursus" is out!

Tuesday, 9 November 2010

Infinispan Community Meetup @ Devoxx 2010

And a quick note, just because open source is all about Free as in Speech doesn't mean we can't have free beer. Yes, we're sponsoring beer as well as other project-related goodies, but these are limited, first come first served.

Details

Where: Kulminator, close to Groenplaats Station, Antwerp

When: Monday the 15th of November, 2010

What time: 20:00


Hope to see many of you there!

Cheers

Manik

Thursday, 4 November 2010

Infinispan likes GitHub

Anyway, a quick summary:

- Infinispan sources are no longer in Subversion, on JBoss.org

- The Infinispan Subversion repository is still browseable, in read-only mode!

- The primary repository for Infinispan is now http://github.com/infinispan/infinispan

- Clone this repository at will!

- Contributions should take the form of pull requests on GitHub

- Infinispan's Hudson, release tooling and other systems have been updated to reflect this change

BETA is out!!

The JOPR/RHQ plugin now works in multi cache manager environments (ISPN-675) and thanks to ISPN-754, cache manager instances can be easily identified when using the JOPR/RHQ management GUI. As a result of ISPN-754, JMX object names follow best practices as set up by Sun/Oracle and so this means that object names exported to JMX have changed from this version onwards. See this wiki page for detailed information.

For a list of all fixes since Alpha5, have a look at the release notes in JIRA, and as always, download the release here, and let us know what you think using the user forums.

Onward to release candidate and final release phases! :-)

Enjoy,

Manik

Friday, 29 October 2010

4.2.0.ALPHA5 is out!

Please do give this release a spin and provide as much feedback as you can. A feature-complete beta is imminent and we expect it to be very stable - as far as betas go - and all thanks to your feedback.

Download the release here, and talk to us about it on the forums here.

Enjoy,

Manik

Wednesday, 27 October 2010

Data-as-a-Service: a talk by yours truly

Data-as-a-Service using Infinispan from JBoss Developer on Vimeo.

Enjoy,

Manik

Friday, 22 October 2010

Tuesday, 19 October 2010

Welcome Trustin Lee

Welcome aboard, Trustin! :)

Cheers

Manik

Wednesday, 13 October 2010

Infinispan 4.2Alpha3 "Ursus" is out!

Thursday, 30 September 2010

Want to learn about Infinispan at Devoxx 2010?

I'll be talking about Infinispan as a NoSQL solution, so this should be interesting to anyone trying to build a data service around Infinispan.

Check out the details here. See you in Antwerp!

Cheers

Manik

Friday, 24 September 2010

Infinispan’s been harvested, time to evangelise!

As far as I'm concerned, I'll be at the JAOO conference in Aarhus, Denmark, where I'll be speaking about the importance of the brand new Infinispan Server modules, emphasizing the motivation for developing them and showcasing some really exciting use cases.

Just a few days later I'll be in Berlin for the European edition of JBoss' JUDCon, a developer conference by developers, where I'll be introducing a brand-new, innovative data eviction algorithm included in Infinispan 4.1, which increases eviction precision and reduces overhead. I will also be talking about the Infinispan Server modules at this conference. On top of that, Mircea Markus will be explaining how transactions are handled within Infinispan and how to do continuous querying of Infinispan data grids. In total, Infinispan has 4 talks @ JUDCon which is very exciting for us!!

To round things up, on the 14th of October I'll be in Lausanne where I'll be introducing the audience of the local JUG to Infinispan, talking about our motivations to create Infinispan, how different it is to JBoss Cache...etc.

So, if you happen to be around, make sure you come! It'll be fun :)

Friday, 17 September 2010

4.2.ALPHA2 "Ursus" is out!

JavaOne 2010

The BOF is on Tuesday, at 21:00, titled "A new era for in-memory data grids". Click on the link for more details.

My main conference session is on Wednesday, at 13:00, titled "Measuring Performance and Looking for Bottlenecks in Java-Based Data Grids". This should be a fun and interesting talk!

Further, the Red Hat/JBoss booth in the pavilion will be running a series of "mini-sessions", with a chance to meet and interface with the core R&D folk from JBoss including myself. I'm running a mini-session on building a Data-as-a-Service (DaaS) grid using Infinispan on Tuesday and Wednesday, along with similarly exciting talks by other Red Hatters. More details here.

And finally, the JBoss party. JBoss parties at JavaOne have become something of an institution and you sure wouldn't want to be left out! Details here, make sure you get your invitations early as these always run out fast.

See you in San Francisco!

Cheers

Manik

Thursday, 16 September 2010

Want to become a full-time dev on Infinispan?

- A résumé, in plain text or as a PDF document (or a link to an online résumé)

- Details of your contribution to Infinispan or other JBoss projects

- Including links to JIRAs, changelogs if relevant

- Links to community documentation, wikis, etc that may be relevant

- Details of your contribution to other open source projects

- Including links to issue trackers, source code, etc.

- Links to community documentation, wikis, etc that may be relevant

- Your current time zone

- Working with an extremely active open source community

- Ability to work remotely, communicating via email/IRC

- Work on some of the most exciting tech, alongside leading engineers in the Java landscape today

- Help shape cloud-based data storage for the next generation of applications

Monday, 13 September 2010

Infinispan Refcard

DZone today published a Refcard on Infinispan. For those of you not familiar with DZone's Refcardz, these are quick-lookup "cheat sheets" on various technologies, targeted at developers.

I hope you find the Infinispan Refcard useful. Download it, save it, pass it on to friends. Oh yeah, and tweet about it too - tell the world! :)

Cheers

Manik

Tuesday, 7 September 2010

4.2.ALPHA1 "Ursus" is out!

4.2.ALPHA1 has just been released!

Besides other things, it contains the following two features:

- support for deadlock detection for eagerly locking transactions (new)

- a very interesting optimisation for eager locking, which allows one to benefit from eager locking semantics with the same performance as "lazy" locking. You can read more about this here.

For download information go here. For a detailed list of features refer to the release notes.

Thursday, 2 September 2010

4.1.0.FINAL is out - and announcing 4.2.0

This is a very important release. If you are using 4.0.0 (Starobrno), I strongly recommend upgrading to Radegast as we have a whole host of bug fixes, performance improvements and new features for you. A full changelog is available on JIRA, but a few key features to note are server endpoints, a Java-based client for the Hot Rod protocol, and the new LIRS eviction algorithm.

Download it, give it a go, and talk about it on the forums. Tell your friends about it, tweet about it.

The other interesting piece of news is the announcement of a 4.2.0 release. We've decided to take a few key new features from 5.0.0 and release them earlier, as 4.2.0 - codenamed Ursus. If you are interested in what's going to be in Ursus, have a look at this feature set, and expect a beta on Ursus pretty soon now!

Enjoy!

Manik

Wednesday, 18 August 2010

Radegast ever closer to a final release - CR3 is released.

There has been a lot of activity in the Infinispan community over the last 3 weeks, with lots of people putting CR2 through its paces, and reporting everything from the trivial through to the critical. This is awesome stuff, folks - keep it up!

This release has fixed a whole bunch of things you guys have reported. Many thanks to Galder, Mircea, Vladimir and Sanne, working hard around vacations to get this release out.

Detailed release note reports are on JIRA, and it can be downloaded in the usual place. Use the forums and report stuff - push this as hard as you've been pushing CR2 and we will have that rock-solid final release we all want!

Enjoy!

Manik

Tuesday, 20 July 2010

Infinispan 4.1.0 "Radegast" 2nd release candidate just released!

Manik

Tuesday, 6 July 2010

Infinispan 4.1.0.CR1 is now available!

- A fantastic demo showing how to run Infinispan in EC2. Check Noel O'Connor's blog last month for more detailed information.

- Enable Hot Rod servers to run behind a proxy in environments such as EC2, and make TCP buffers and TCP no delay flag configurable for both the server and client.

- Important performance improvements for the Infinispan-based Lucene directory and the Hot Rod client and server.

- To avoid confusion, the single jar distribution has been removed. The two remaining distributions are: The bin distribution containing the Infinispan modules and documentation, and the all distribution which adds demos on top of that.

Monday, 28 June 2010

JBossWorld and JUDCon post-mortem

The first-ever JUDCon, the developer conference that took place the day before JBoss World and Red Hat Summit, was great and I look forward to future JUDCons around the world. Pics from the first-ever JUDCon are now online, along with some video interviews with Jason Greene and Pete Muir.

Some of the great presentations at JUDCon include Galder Zamarreño's talk on Infinispan's Hot Rod protocol (slides here) and a talk I did with Mircea Markus on the cache benchmarking framework and benchmarking Infinispan (slides here).

JBoss World/Red Hat Summit was also very interesting. There is clearly a lot of excitement around Infinispan, and we heard about interesting deployments and use cases, lots of ideas and thoughts for further improvement from customers, contributors and partners.

From JBoss World, there were three talks on Infinispan, including Storing Data on Cloud Infrastructure in a Scalable, Durable Manner which I presented along with Mircea Markus (slides), Why RESTful Design for the Cloud is Best by Galder Zamarreño (slides) and Using Infinispan for High Availability, Load Balancing, & Extreme Performance which I presented along with Galder Zamarreño (slides).

In addition to the slides, the first talk was even recorded so if you missed it, you can watch it below:

Further, Infinispan was showcased on Red Hat CTO Brian Stevens' keynote speech (about 28:15 into the video) where Brian talks about data grids and their importance, and I demonstrate Infinispan.

We even had an open roadmap and design session for Infinispan 5.0, which included not just core Infinispan engineers, but contributors, end-users and anyone who had any sort of interest. I'll post again later with details of 5.0 and what our plans for it will be.

For those of you who couldn't make it to JUDCon and JBoss World, hope the slides and videos on this post will help give you an idea of what went on.

Cheers

Manik

Wednesday, 23 June 2010

JUDCon and the JBoss Community Awards

The JBoss Community Recognition Award winners were also announced at JUDCon, and I was really surprised to find that 4 of the 5 winners were Infinispan contributors. Sanne Grinovero, Alex Kluge, Phil van Dyck and Amin Abbaspour - thanks for your participation in Infinispan, your peers have recognised your contributions and have voted with mouse clicks! Congrats!

Given how many Infinispan engineers and contributors are at JBoss World this week, we are having an open Infinispan 5.0 planning and roadmap session. So if you are around and would like to join in, this will be at 4:00pm on Thursday, in Campground 1. For those of you not able to make it, discussions will continue via the usual channels of IRC and the developer mailing list.

Now to prepare for my next talk ... :-)

Cheers

Manik

Friday, 28 May 2010

Infinispan 4.1Beta2 released

The second and hopefully last Beta for 4.1 has just been released. Thanks to excellent community feedback, several Hot Rod client/server issues were fixed. Besides this and other bug fixes (check this for the complete list), the following new features were added:

- a key affinity service that generates keys to be distributed to specific nodes

- RemoteCacheStore, which allows an Infinispan cluster to be used as a remote data store
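To make the idea of key affinity more concrete, here is a minimal, self-contained sketch in plain Java. It is not Infinispan's key affinity API: the class name, the node names and the "hash modulo node count" placement rule are all assumptions made for illustration. It only shows the general technique of generating candidate keys until one maps to the node you want.

```java
import java.util.List;
import java.util.UUID;

/** Illustrative only: this is NOT Infinispan's key affinity API. */
final class KeyAffinitySketch {
    private final List<String> nodes;

    KeyAffinitySketch(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("At least one node is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    /** Hypothetical placement rule: hash of the key modulo the node count. */
    String ownerOf(String key) {
        return nodes.get(Math.floorMod(key.hashCode(), nodes.size()));
    }

    /** Generates random keys until one maps to the requested node. */
    String keyFor(String targetNode) {
        if (!nodes.contains(targetNode)) {
            throw new IllegalArgumentException("Unknown node: " + targetNode);
        }
        while (true) {
            String candidate = UUID.randomUUID().toString();
            if (ownerOf(candidate).equals(targetNode)) {
                return candidate;
            }
        }
    }

    public static void main(String[] args) {
        KeyAffinitySketch sketch =
                new KeyAffinitySketch(List.of("nodeA", "nodeB", "nodeC"));
        String key = sketch.keyFor("nodeB");
        System.out.println(key + " is owned by " + sketch.ownerOf(key));
    }
}
```

A real service would probably pre-generate and queue keys per node rather than loop on demand, but the principle is the same.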

Enjoy!

Mircea

Tuesday, 25 May 2010

Infinispan EC2 Demo

Infinispan's distributed mode is well suited to handling large datasets and scaling the clustered cache by adding nodes as required. These days when inexpensive scaling is thought of, cloud computing immediately comes to mind.

One of the largest providers of cloud computing is Amazon with its Amazon Web Services (AWS) offering. AWS provides computing capacity on demand with its EC2 services and storage on demand with its S3 and EBS offerings. EC2 provides just an operating system to run on, and it is a relatively straightforward process to get an Infinispan cluster running on EC2. However, there is one gotcha: EC2 does not support UDP multicasting at this time, and this is the default node discovery approach used by Infinispan to detect nodes running in a cluster.

Some background on network communications

Infinispan uses the JGroups library to handle all network communications. JGroups enables cluster node detection, a process called discovery, and reliable data transfer between nodes. JGroups also handles the process of nodes entering and exiting the cluster and master node determination for the cluster.

Configuring JGroups in Infinispan

The JGroups configuration details are passed to Infinispan in the Infinispan configuration file:

<transport clusterName="infinispan-cluster" distributedSyncTimeout="50000"

transportClass="org.infinispan.remoting.transport.jgroups.JGroupsTransport">

<properties>

<property name="configurationFile" value="jgroups-s3_ping-aws.xml" />

</properties>

</transport>

Node Discovery

JGroups has three discovery options which can be used for node discovery on EC2.

The first is to statically configure the address of each node in the cluster in each of its peers. This simplifies discovery but is not suitable when the IP address of each node is dynamic or nodes are added and removed on demand.

The second method is to use a Gossip Router. This is an external Java process which runs and waits for connections from potential cluster nodes. Each node in the cluster needs to be configured with the IP address and port that the Gossip Router is listening on. At node initialization, the node connects to the Gossip Router and retrieves the list of other nodes in the cluster.

Example JGroups gossip router configuration

<config>

<TCP bind_port="7800" />

<TCPGOSSIP timeout="3000" initial_hosts="192.168.1.20[12000]"

num_initial_members="3" />

<MERGE2 max_interval="30000" min_interval="10000" />

<FD_SOCK start_port="9777" />

...

</config>

The infinispan-4.1.0-SNAPSHOT/etc/config-samples/ directory has sample configuration files for use with the Gossip Router. The approach works well but the dependency on an external process can be limiting.

The third method is to use the new S3_PING protocol that has been added to JGroups. With this protocol, the user configures an S3 bucket (location) where each node in the cluster stores its connection details, and upon startup each node sees the other nodes in the cluster. This avoids the need for a separate discovery process and gets around the static configuration of nodes.

Example JGroups configuration using the S3_PING protocol:

<config>

<TCP bind_port="7800" />

<S3_PING secret_access_key="secretaccess_key" access_key="access_key"

location="s3_bucket_location" />

<MERGE2 max_interval="30000" min_interval="10000" />

<FD_SOCK start_port="9777" />

...

</config>
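The idea behind bucket-based discovery can be sketched in a few lines of plain Java. This is only a conceptual illustration, not the JGroups S3_PING implementation: an in-memory map stands in for the S3 bucket, and the class, method and node names are hypothetical.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;

/** Illustrative only: an in-memory map stands in for the shared S3 bucket. */
final class SharedBucketDiscovery {
    // Hypothetical "bucket": node name -> "host:port" connection details.
    private final Map<String, String> bucket = new ConcurrentHashMap<>();

    /** A starting node publishes its own address, then reads everyone else's. */
    Set<String> join(String nodeName, String address) {
        bucket.put(nodeName, address);
        Set<String> peers = new TreeSet<>(bucket.values());
        peers.remove(address);
        return peers;
    }

    /** A node leaving the cluster removes its entry from the bucket. */
    void leave(String nodeName) {
        bucket.remove(nodeName);
    }

    public static void main(String[] args) {
        SharedBucketDiscovery discovery = new SharedBucketDiscovery();
        System.out.println("node1 sees: " + discovery.join("node1", "10.0.0.1:7800"));
        System.out.println("node2 sees: " + discovery.join("node2", "10.0.0.2:7800"));
        System.out.println("node3 sees: " + discovery.join("node3", "10.0.0.3:7800"));
    }
}
```

Because every node reads and writes the same shared location, no node needs to know any peer address in advance and no extra discovery process has to be kept running.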

EC2 demo

The purpose of this demo is to show how an Infinispan cache running on EC2 can easily form a cluster and retrieve data seamlessly across the nodes in the cluster. Adding further Infinispan nodes to the cluster automatically redistributes the existing data and offers higher availability in the case of node failure.

To demonstrate Infinispan, data needs to be added to the nodes in the cluster. We will use one of the many public datasets that Amazon hosts on AWS: the influenza virus dataset.

This dataset has a number of characteristics which make it suitable for the demo. First of all, it is not a trivial dataset: there are over 200,000 records. Secondly, there are internal relationships within the data which can be used to demonstrate retrieving data from different cache nodes. The data is made up of viruses, nucleotides and proteins; each influenza virus has a related nucleotide and each nucleotide has one or more proteins. Each type is stored in its own cache instance.

The caches are populated as follows :

- InfluenzaCache - populated with data read from the Influenza.dat file, approx 82,000 entries

- ProteinCache - populated with data read from the Influenza_aa.dat file, approx 102,000 entries

- NucleotideCache - populated with data read from the Influenza_na.dat file, approx 82,000 entries

The demo requires 4 small EC2 instances running Linux: one instance for each cache node and one for the JBoss application server which runs the UI. Each node has to have Sun JDK 1.6 installed in order to run the demos. In order to use the Web-based GUI, JBoss AS 5 should also be installed on one node.

In order for the nodes to communicate with each other the EC2 firewall needs to be modified. Each node should have the following ports open:

- TCP 22 – For SSH access

- TCP 7800 to TCP 7810 – used for JGroups cluster communications

- TCP 8080 – Only required for the node running the AS5 instance in order to access the Web UI.

- TCP 9777 - Required for FD_SOCK, the socket based failure detection module of the JGroups stack.
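Once the firewall rules are in place, a quick reachability check from another node can save some head-scratching. The following is a small sketch using only the JDK; the class name and the default host are assumptions. Note that a port only shows as open when a process is actually listening on it, so run the check after the cache instances have started.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

/** Illustrative helper: checks the TCP ports listed for the EC2 demo. */
final class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /** The ports from the list above: 22, 7800 to 7810, 8080 and 9777. */
    static List<Integer> demoPorts() {
        List<Integer> ports = new ArrayList<>();
        ports.add(22);
        for (int port = 7800; port <= 7810; port++) {
            ports.add(port);
        }
        ports.add(8080);
        ports.add(9777);
        return ports;
    }

    public static void main(String[] args) {
        // The host defaults to localhost; pass a node's address as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        for (int port : demoPorts()) {
            String state = isOpen(host, port, 1000) ? "open" : "closed or filtered";
            System.out.println(host + ":" + port + " -> " + state);
        }
    }
}
```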

To run the demo, download the Infinispan "all" distribution, (infinispan-xxx-all.zip) to a directory on each node and unzip the archive.

Edit the etc/config-samples/ec2-demo/jgroups-s3_ping-aws.xml file to add the correct AWS S3 security credentials and bucket name.

Start one of the cache instances on each node using one of the following scripts from the bin directory:

- runEC2Demo-influenza.sh

- runEC2Demo-nucleotide.sh

- runEC2Demo-protein.sh

Each script will start up and display the following information:

[tmp] ./runEC2Demo-nucleotide.sh

CacheBuilder called with /opt/infinispan-4.1.0-SNAPSHOT/etc/config-samples/ec2-demo/infinispan-ec2-config.xml

-------------------------------------------------------------------

GMS: address=redlappie-37477, cluster=infinispan-cluster, physical address=192.168.122.1:7800

-------------------------------------------------------------------

Caches created....

Starting CacheManager

Cache Address=redlappie-57930

Cache Address=redlappie-37477

Cache Address=redlappie-18122

Parsing files....Parsing [/opt/infinispan-4.1.0-SNAPSHOT/etc/Amazon-TestData/influenza_na.dat]

About to load 81904 nucleotide elements into NucleiodCache

Added 5000 Nucleotide records

Added 10000 Nucleotide records

Added 15000 Nucleotide records

Added 20000 Nucleotide records

Added 25000 Nucleotide records

Added 30000 Nucleotide records

Added 35000 Nucleotide records

Added 40000 Nucleotide records

Added 45000 Nucleotide records

Added 50000 Nucleotide records

Added 55000 Nucleotide records

Added 60000 Nucleotide records

Added 65000 Nucleotide records

Added 70000 Nucleotide records

Added 75000 Nucleotide records

Added 80000 Nucleotide records

Loaded 81904 nucleotide elements into NucleotidCache

Parsing files....Done

Protein/Influenza/Nucleotide Cache Size-->9572/10000/81904

Protein/Influenza/Nucleotide Cache Size-->9572/20000/81904

Protein/Influenza/Nucleotide Cache Size-->9572/81904/81904

Protein/Influenza/Nucleotide Cache Size-->9572/81904/81904

Items of interest in the output are the Cache Address lines, which display the addresses of the nodes in the cluster. Also of note is the Protein/Influenza/Nucleotide line, which displays the number of entries in each cache. As other caches start up, these numbers will change as cache entries are dynamically moved around throughout the Infinispan cluster.

To use the web-based UI, we first need to let the server know where the Infinispan configuration files are kept. To do this, edit the jboss-5.1.0.GA/bin/run.conf file and add the following line at the bottom, replacing the path as appropriate:

JAVA_OPTS="$JAVA_OPTS -DCFGPath=/opt/infinispan-4.1.0-SNAPSHOT/etc/config-samples/ec2-demo/"

Now start the Jboss application server using the default profile e.g. run.sh -c default -b xxx.xxx.xxx.xxx, where “xxx.xxx.xxx.xxx” is the public IP address of the node that the AS is running on.

Then drop the infinispan-ec2-demoui.war into the jboss-5.1.0.GA /server/default/deploy directory.

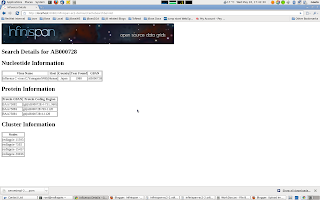

Finally point your web browser to http://public-ip-address:8080/infinispan-ec2-demoui and the following page will appear.

The search criteria are the values in the first column of the /etc/Amazon-TestData/influenza.dat file, e.g. AB000604, AB000612, etc.

Note that this demo will be available in Infinispan 4.1.0.BETA2 onwards. If you are impatient, you can always build it yourself from Infinispan's source code repository.

Enjoy,

Noel

Thursday, 13 May 2010

Client/Server architectures strike back, Infinispan 4.1.0.Beta1 is out!

A detailed change log is available and the release is downloadable from the usual place.

For the rest of the blog post, we’d like to share some of the objectives of Infinispan 4.1 with the community. Here at ‘chez Infinispan’ we’ve been repeating the same story over and over again: ‘Memory is the new Disk, Disk is the new Tape’ and this release is yet another step to educate the community on this fact. Client/Server architectures based around Infinispan data grids are key to enabling this reality but in case you might be wondering, why would someone use Infinispan in a client/server mode compared to using it as peer-to-peer (p2p) mode? How does the client/server architecture enable memory to become the new disk?

Broadly speaking, there are three areas where an Infinispan client/server architecture might be chosen over a p2p one:

1. Access from non-Java environments

Infinispan’s roots can be traced back to JBoss Cache, a caching library developed to provide J2EE application servers with data replication. As such, the primary way of accessing Infinispan or JBoss Cache has always been via direct calls coming from the same JVM. However, as we have said before, Infinispan’s goal is to provide much more than that: it aims to provide data grid access to any software application that you can think of, and this obviously requires Infinispan to enable access from non-Java environments.

Infinispan comes with a series of server modules that enable precisely that. All you have to do is decide which API suits your environment best. Do you want to enable direct access to Infinispan via HTTP? Just use our REST or WebSocket modules. Or is it the case that you’re looking to expand the capabilities of your Memcached-based applications? Start an Infinispan-backed Memcached server and your existing Memcached clients will be able to talk to it immediately. Or maybe you’re interested in accessing Infinispan via Hot Rod, our new, highly efficient binary protocol which supports smart-clients? Then give us a hand developing non-Java clients that can talk the Hot Rod protocol! :)

2. Infinispan as a dedicated data tier

Quite often applications running in a p2p environment have caching requirements larger than the heap size, in which case it makes a lot of sense to move caching into a separate dedicated tier.

It’s also very common to find businesses with workloads that vary over time, where there’s a need to start business processing servers to deal with increased load, or stop them when the workload drops to lower power consumption. When Infinispan data grid instances are deployed alongside business processing servers, starting/stopping these can be a slow process due to state transfer, or rehashing, particularly when large data sets are used. Separating Infinispan into a dedicated tier provides faster and more predictable server start/stop procedures – ideal for modern cloud-based deployments where elasticity in your application tier is important.

It’s common knowledge that optimizations for large memory usage systems compared to optimizations for CPU intensive systems are very different. If you mix both your data grid and business logic under the same roof, finding a balanced set of optimizations that keeps both sides happy is difficult. Once again, separating the data and business tiers can alleviate this problem.

You might be wondering whether moving Infinispan to a separate tier will hurt your performance in terms of time per call, since access to data now requires a network call. However, separating tiers gives you a much more scalable architecture, and your data is never more than 1 network call away. Even if the dedicated Infinispan data grid is configured with distribution, a Hot Rod smart-client implementation - such as the Java reference implementation shipped with Infinispan 4.1.0.BETA1 - can determine where a particular key is located and hit a server that contains it directly.
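To illustrate how a client can work out which server holds a key without asking around, here is a minimal consistent-hash ring in plain Java. This is a generic sketch, not Hot Rod's actual hashing or topology mechanism: the class name, the server names and the choice of CRC32 as hash function are all assumptions made for the example.

```java
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.CRC32;

/** Illustrative only: a generic hash ring, not the Hot Rod client's algorithm. */
final class HashRingSketch {
    // Position on the ring -> server name.
    private final TreeMap<Long, String> ring = new TreeMap<>();

    HashRingSketch(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("At least one server is required");
        }
        for (String server : servers) {
            ring.put(hash(server), server);
        }
    }

    /** CRC32 is used here purely for simplicity (an assumption of this sketch). */
    private static long hash(String value) {
        CRC32 crc = new CRC32();
        crc.update(value.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    /** Picks the first server at or after the key's position, wrapping around. */
    String serverFor(String key) {
        Map.Entry<Long, String> entry = ring.ceilingEntry(hash(key));
        return (entry != null ? entry : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        HashRingSketch ring = new HashRingSketch(List.of("serverA", "serverB", "serverC"));
        for (String key : List.of("AB000604", "AB000612")) {
            System.out.println(key + " -> " + ring.serverFor(key));
        }
    }
}
```

Since every client computes the same mapping locally, a request can go straight to a server that owns the key, which is what keeps the data one network call away.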

3. Data-as-a-Service (DaaS)

Increasingly, we see scenarios where environments host a multitude of applications that share the need for data storage, for example in Platform-as-a-Service (PaaS) cloud-style environments (whether public or internal). In such configurations, you don’t want to be launching a data grid for each application since it’d be a nightmare to maintain – not to mention resource-wasteful. Instead you want deployments or applications to start processing as soon as possible. In these cases, it’d make a lot of sense to keep a pool of Infinispan data grid nodes acting as a shared storage tier. Isolated cache access could easily be achieved by making sure each application uses a different cache name (i.e. the application name could be used as the cache name). This is easy to do with protocols such as Hot Rod, where each operation requires a cache name to be provided.
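As a rough picture of what per-application isolation by cache name means, here is a tiny in-memory sketch in plain Java. It does not use Infinispan or Hot Rod at all; the class, the method names and the application names are hypothetical, and a nested map simply stands in for the shared storage tier.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Illustrative only: a nested map stands in for a shared data grid tier. */
final class SharedGridSketch {
    // Cache name (e.g. the application name) -> that cache's entries.
    private final Map<String, Map<String, String>> caches = new ConcurrentHashMap<>();

    private Map<String, String> cache(String cacheName) {
        return caches.computeIfAbsent(cacheName, name -> new ConcurrentHashMap<>());
    }

    /** Every operation names the cache it targets, so entries never mix. */
    void put(String cacheName, String key, String value) {
        cache(cacheName).put(key, value);
    }

    String get(String cacheName, String key) {
        return cache(cacheName).get(key);
    }

    public static void main(String[] args) {
        SharedGridSketch grid = new SharedGridSketch();
        grid.put("billing-app", "user:1", "invoice-42");
        grid.put("inventory-app", "user:1", "widget");
        System.out.println(grid.get("billing-app", "user:1"));
        System.out.println(grid.get("inventory-app", "user:1"));
    }
}
```

The same key can exist in both caches without collision because the cache name is part of every call.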

Regardless of the scenarios explained above, there’re some common benefits to separating an Infinispan data grid from the business logic that accesses it. In fact, these are very similar to the benefits achieved when application servers and databases don’t run under the same roof. By separating the layers, you can manage each layer independently, which means that adding/removing nodes, maintenance, upgrades...etc can be handled independently. In other words, if you wanna upgrade your application server or servlet container, you don’t need to bring down your data layer.

All of this is available to you now, but the story does not end here. Bearing in mind that these client/server modules are based around reliable TCP/IP, using Netty, the fast and reliable NIO library, they could also in the future form the base of new functionality. For example, client/server modules could be linked together to connect geographically separated Infinispan data grids and enable different disaster recovery strategies.

So, download Infinispan 4.1.0.BETA1 right away, give a try to these new modules and let us know your thoughts.

Wednesday, 28 April 2010

Infinispan WebSocket Server

I just committed a first cut of the new Infinispan WebSocket Server to Subversion.

You get a very simple Cache object in your web page JavaScript that supports:

- put/get/remove operations on your Infinispan Cache.

- notify/unnotify mechanism through which your web page can manage Cache entry update notifications, pushed to the browser.

Friday, 23 April 2010

4.1.0.ALPHA3 is out

A detailed changelog is available. The release is downloadable in the usual place.

If you use Maven, please note that we now use the new JBoss Nexus-based Maven repository. The Maven coordinates for Infinispan are still the same (group id org.infinispan, artifact id infinispan-core, etc) but the repository you need to point to has changed. Setting up your Maven settings.xml is described here.

Enjoy!

Manik

Tuesday, 13 April 2010

Boston, are you ready for Infinispan?

http://summitblog.redhat.com/2010/04/12/boston-are-you-ready-for-infinispan/

Look forward to seeing you there!

Manik

Tuesday, 6 April 2010

Infinispan 4.1Alpha2 is out!

Besides, Infinispan 4.1.0.Alpha2 is the first release to feature the new LIRS eviction policy and the new eviction design that batches updates, which in combination should provide users with more efficient and accurate eviction functionality.

Another cool feature added in this release is GridFileSystem: a new, experimental API that exposes an Infinispan-backed data grid as a file system. Specifically, the API works as an extension to the JDK's File, InputStream and OutputStream classes. You can read more on GridFileSystem here.

Finally, you can find the API docs for 4.1.0.Alpha2 here. Again, please consider this an unstable release that is meant to gather feedback on the Hot Rod client/server modules and the new eviction design.

Cheers,

Galder & Mircea

Tuesday, 30 March 2010

Infinispan eviction, batching updates and LIRS

Friday, 12 March 2010

No time to rest, 4.1.0.Alpha1 is here!

This new module enables you to use Infinispan as a replacement for any of your Memcached servers. As an added bonus, Infinispan's Memcached server module allows you to start several instances that form a cluster and replicate, invalidate or distribute data between them, a feature not present in the default Memcached implementation.

On top of the clustering capabilities, the Infinispan Memcached server module gets built-in eviction, cache store support, JMX/Jopr monitoring and more, for free.

To get started, first download Infinispan 4.1.0.Alpha1. Then, go to the "Using Infinispan Memcached Server" wiki and follow the instructions there. If you're interested in finding out how to set up multiple Infinispan Memcached servers in a cluster, head to the "Talking To Infinispan Memcached Servers From Non-Java Clients" wiki, where you'll also find out how to access our Memcached implementation from non-Java clients.
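Since the module stands in for a Memcached server, any client that speaks the memcached protocol should be able to talk to it. As a rough sketch, assuming the server accepts the memcached text protocol and listens on localhost port 11211 (the usual memcached default, adjust to your setup), this is what a bare-bones exchange over a plain socket could look like. The class name and the key/value used are made up for illustration; in practice you would use an existing memcached client library.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration: hand-rolled memcached text protocol commands.
final class MemcachedTextSketch {

    // Text protocol storage command: "set <key> <flags> <exptime> <bytes>\r\n<data>\r\n"
    static String setCommand(String key, String value) {
        int length = value.getBytes(StandardCharsets.UTF_8).length;
        return "set " + key + " 0 0 " + length + "\r\n" + value + "\r\n";
    }

    // Text protocol retrieval command: "get <key>\r\n"
    static String getCommand(String key) {
        return "get " + key + "\r\n";
    }

    public static void main(String[] args) throws IOException {
        // Assumed endpoint; pass host and port as arguments to override.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 11211;

        try (Socket socket = new Socket(host, port)) {
            OutputStream out = socket.getOutputStream();
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8));

            out.write(setCommand("greeting", "hello").getBytes(StandardCharsets.UTF_8));
            out.flush();
            System.out.println(in.readLine()); // server's one-line reply to the set

            out.write(getCommand("greeting").getBytes(StandardCharsets.UTF_8));
            out.flush();
            String line;
            while ((line = in.readLine()) != null) {
                System.out.println(line);
                if (line.equals("END")) { // the protocol terminates a get response with END
                    break;
                }
            }
        }
    }
}
```

Pointing the same program at a different node of the cluster is a quick way to check that data stored through one instance is visible through another.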

Finally, you can find the API docs for 4.1.0.Alpha1 here. Note that this is an unstable release that is meant to gather feedback on the Memcached server module as early as possible.

Cheers,

Galder

Tuesday, 23 February 2010

Infinispan 4.0.0.Final has landed!

It is with great pleasure that I'd like to announce the availability of the final release of Infinispan 4.0.0. Infinispan is an open source, Java-based data grid platform that I first announced last April, and since then the codebase has been through a series of alpha and beta releases, and most recently 4 release candidates which generated a lot of community feedback.

Here are some simple charts, generated using the framework. The first set compares Infinispan against the latest and greatest JBoss Cache release (3.2.2.GA at this time), using both synchronous and asynchronous replication. But first, a little bit about the nodes in our test lab, which comprises a large number of nodes, each with the following configuration:

- 2 x Intel Xeon E5530 2.40 GHz quad core, hyperthreaded processors (= 16 hardware threads per node)

- 12GB memory per node, although the JVM heaps are limited at 2GB

- RHEL 5.4 with Sun 64-bit JDK 1.6.0_18

- InfiniBand connectivity between nodes

- Run from 2 to 12 nodes in increments of 2

- 25 worker threads per node

- Writing 1kb of state (randomly generated Strings) each time, with a 20% write percentage

[Charts: reads and writes, for synchronous and asynchronous replication]

- Run from 4 to 48 nodes, in increments of 4 (to better demonstrate linear scalability)

- 25 worker threads per node

- Writing 1kb of state (randomly generated Strings) each time, with a 20% write percentage

As you can see, Infinispan scales linearly as the node count increases. Among the different configurations tested, lazy stands for enabling lazy unmarshalling, which allows state to be stored in Infinispan as byte arrays rather than deserialized objects. This has advantages for certain access patterns, for example where remote lookups are very common and local lookups are rare.
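To illustrate the idea behind lazy unmarshalling, here is a minimal sketch of a value holder that keeps the serialized bytes and only deserializes them the first time a local caller asks for the object. This is not Infinispan's implementation: the class name LazyValueSketch is made up, and plain Java serialization stands in for whatever marshalling the grid really uses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical illustration of lazy unmarshalling; not Infinispan's own class.
final class LazyValueSketch<T extends Serializable> {
    private final byte[] raw;   // serialized form, as it would arrive over the wire
    private T instance;         // deserialized form, created on first local read
    private int unmarshalCount; // how many times deserialization actually ran

    LazyValueSketch(byte[] raw) {
        this.raw = raw;
    }

    // Serializes a value with plain Java serialization (an assumption for this sketch).
    static byte[] marshal(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Remote lookup path: hand the bytes straight back, no deserialization needed.
    byte[] rawBytes() {
        return raw;
    }

    // Local lookup path: deserialize once, then reuse the object.
    @SuppressWarnings("unchecked")
    synchronized T get() {
        if (instance == null) {
            try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(raw))) {
                instance = (T) in.readObject();
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            } catch (ClassNotFoundException e) {
                throw new IllegalStateException(e);
            }
            unmarshalCount++;
        }
        return instance;
    }

    synchronized int unmarshalCount() {
        return unmarshalCount;
    }
}
```

When most requests for an entry come from other nodes, the bytes can be shipped as they are and the cost of deserializing is never paid on the owning node, which is why this helps when remote lookups dominate.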

How does Infinispan compare against ${POPULAR_PROPRIETARY_DATAGRID_PRODUCT}?

And just because we cannot publish such results, that does not mean that you cannot run such comparisons yourself. The Cache Benchmark Framework has support for different data grid products, including Oracle Coherence, and more can be added easily.

Aren't statistics just lies?

We strongly recommend that you run the benchmarks yourself. Not only does this prove things for yourself, but it also allows you to benchmark behaviour on your specific hardware infrastructure, using the specific configurations you'd use in real life, and with your specific access patterns.
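To make "access pattern" a bit more concrete, here is a minimal sketch of the kind of workload described in the test setup above: a number of worker threads per node, values of roughly 1kb made of random characters, and a 20% write percentage. This is not the Cache Benchmark Framework. The class name WorkloadSketch, the key space of 1000 keys and the use of a ConcurrentHashMap as a stand-in for the cache are all assumptions for illustration.

```java
import java.util.Random;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical illustration of a mixed read/write workload; not the benchmark framework.
final class WorkloadSketch {

    // Builds a random lowercase string of the requested length.
    static String randomString(Random rnd, int chars) {
        StringBuilder sb = new StringBuilder(chars);
        for (int i = 0; i < chars; i++) {
            sb.append((char) ('a' + rnd.nextInt(26)));
        }
        return sb.toString();
    }

    // Runs the workload and returns {reads, writes}.
    static long[] run(ConcurrentMap<String, String> cache, int threads,
                      int opsPerThread, int writePercent, int valueChars) {
        AtomicLong reads = new AtomicLong();
        AtomicLong writes = new AtomicLong();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            final long seed = t; // fixed per-thread seed keeps runs repeatable
            pool.submit(() -> {
                Random rnd = new Random(seed);
                for (int i = 0; i < opsPerThread; i++) {
                    String key = "key-" + rnd.nextInt(1000); // assumed key space
                    if (rnd.nextInt(100) < writePercent) {
                        cache.put(key, randomString(rnd, valueChars));
                        writes.incrementAndGet();
                    } else {
                        cache.get(key);
                        reads.incrementAndGet();
                    }
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
                throw new IllegalStateException("workload did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new long[] {reads.get(), writes.get()};
    }
}
```

Calling WorkloadSketch.run(new java.util.concurrent.ConcurrentHashMap<>(), 25, 1000, 20, 1024) mirrors the 25 threads, 1kb values and 20% writes mentioned above. Changing the write percentage, value size or key space is the quickest way to approximate your own access pattern before reaching for the full framework.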